Gravity Probe β

Jul 10, 2022

The modeling, lighting and compositing pipeline. Click for the live demo hosted on Shadertoy.

This project recreates a photo from NASA’s Gravity Probe B experiment entirely in code. The shader contains a physically based path tracer alongside an embedded wavelet image decompressor, which decodes a compressed photo of Fields Medalist Maryam Mirzakhani. Physically based lighting, rendering and post-processing effects are then applied to reproduce the look of the original photo. For a more in-depth look at why I chose this scene, check out the first part of my (unfinished) blog post about the project.

Image Decompression

Embedding Maryam’s photo into the code of the shader meant finding an efficient compression algorithm that could shrink the image down to a small fraction of its original size. Given the cost of writing highly recursive code, I decided that the compressed image should take up no more than a few kilobytes of memory if I was to avoid runaway compile times.

While there are many ways to compress an image, the most effective algorithms all apply a stack of techniques in succession to achieve the highest compression ratios. By far the best-known and most ubiquitous file format in use today is the humble JPEG, introduced by the Joint Photographic Experts Group in 1992. JPEG breaks up each image into small tiles called macroblocks, which are decomposed with a discrete cosine transform and quantised before being compressed. Its successor format, JPEG-2000, uses wavelet transforms in addition to more advanced coding and quantisation techniques to achieve better compression for the same visual quality.

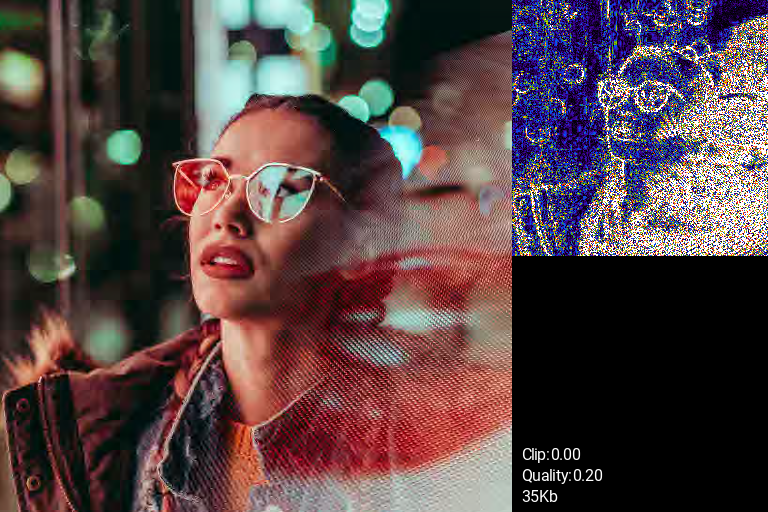

An early test of the codec on a colour image using a macroblock encoding scheme. Also shown is a false-colour map of the reconstruction error together with the selected quality threshold and resulting output file size. Original photo by Angelos Michalopoulos.

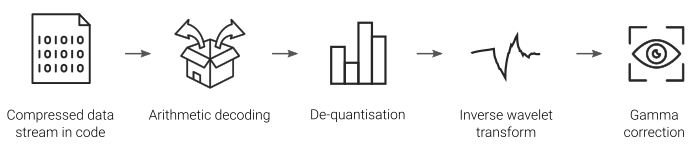

After considering various options, I settled on a design that was loosely based on JPEG-2000. To compress an image, the 2D grid of pixels is first decomposed into its frequency-space representation using the Cohen–Daubechies–Feauveau wavelet transform. Next, the transformed data are quantised and truncated, reducing numerical precision and zeroing out as many of the coefficients as possible. Finally, the quantised data stream is compressed using a fixed-model arithmetic coder. To decompress the image, the same steps are followed in reverse, using each technique’s associated inverse.

Though not as efficient as the original JPEG specifications, my approach was still able to reduce the size of the uncompressed image by approximately 93%.
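The transform and quantisation stages described above can be illustrated with a minimal sketch. It assumes the reversible CDF 5/3 variant of the wavelet, implemented with integer lifting on a 1D signal; the post does not say which member of the Cohen–Daubechies–Feauveau family the shader uses, and the function names here are hypothetical. The arithmetic coding stage is left out entirely.

```python
def cdf53_forward(signal):
    """One level of the integer CDF 5/3 lifting transform (assumed variant).

    Takes an even-length list of ints and returns (approx, detail).
    """
    n = len(signal)
    assert n >= 2 and n % 2 == 0, "sketch only handles even-length input"
    even, odd = signal[0::2], signal[1::2]
    half = n // 2
    # Predict step: each odd sample minus the average of its even neighbours.
    # The right edge is mirrored by clamping the neighbour index.
    detail = [odd[i] - ((even[i] + even[min(i + 1, half - 1)]) >> 1)
              for i in range(half)]
    # Update step: smooth the even samples using the neighbouring details.
    approx = [even[i] + ((detail[max(i - 1, 0)] + detail[i] + 2) >> 2)
              for i in range(half)]
    return approx, detail


def cdf53_inverse(approx, detail):
    """Undo cdf53_forward by running the lifting steps in reverse order."""
    half = len(approx)
    even = [approx[i] - ((detail[max(i - 1, 0)] + detail[i] + 2) >> 2)
            for i in range(half)]
    odd = [detail[i] + ((even[i] + even[min(i + 1, half - 1)]) >> 1)
           for i in range(half)]
    out = [0] * (2 * half)
    out[0::2] = even
    out[1::2] = odd
    return out


def quantise(coeffs, step):
    """Divide by the step and truncate toward zero, so small values become 0."""
    return [int(c / step) for c in coeffs]


def dequantise(quantised, step):
    """Scale quantised coefficients back up (the truncated part is lost)."""
    return [q * step for q in quantised]


# Without quantisation the round trip is lossless.
signal = [10, 12, 14, 13, 9, 8, 8, 7]
approx, detail = cdf53_forward(signal)
assert cdf53_inverse(approx, detail) == signal

# Lossy path: quantising the detail band zeroes out small coefficients.
lossy = cdf53_inverse(approx, dequantise(quantise(detail, 4), 4))
```

A 2D image would apply the same transform along rows and then columns, repeating on the approximation band for additional levels. The quantisation step size is what trades reconstruction error against file size.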

Rendering

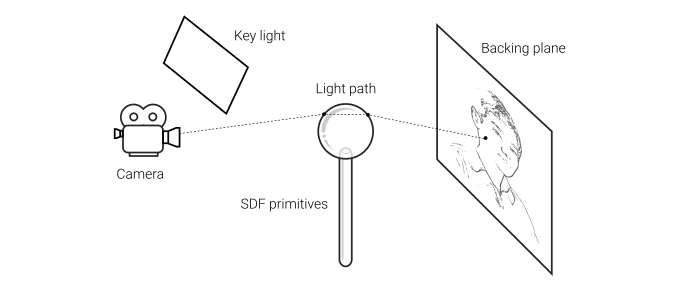

The elegance and simplicity of NASA’s gravity probe image made it the perfect candidate for the highly constrained environment of a shader demo. The scene itself can be modeled using just four primitives: a parametric sphere representing the quartz gyroscope, a tube representing the acrylic plinth, a plane onto which the photo of Maryam is projected, and a second plane which is directly sampled as a light source.

Unlike spheres and planes, which can be tested analytically, finding the intersection between a ray and a tube is more complicated. A ray can intersect a genus-1 shape up to four times, which means finding the roots of a quartic polynomial. To avoid such a complex and expensive operation, I opted instead to use a signed distance function (SDF), which is both simpler to implement and more versatile to work with.

Tracing SDFs requires iteratively marching along each ray to locate its isosurface. Despite there being a loop involved, this operation is still relatively fast: the function returns the minimum distance to its own isosurface at each marching step, so the ray can safely advance by that amount without overshooting. Moreover, primitives whose functions exhibit Lipschitz continuity can be traced more rapidly, because the bound on their derivatives guarantees how large a step is safe.
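As a rough illustration of the marching loop, here is a minimal sphere-tracing sketch. It uses a torus as a stand-in genus-1 shape, which is an assumption on my part; the actual plinth is a swept SDF whose definition isn't reproduced here. The function names, step limit and tolerances are all hypothetical.

```python
import math


def sd_torus(p, major, minor):
    """Signed distance from point p to a torus lying in the xz-plane.

    major is the ring radius, minor is the radius of the tube itself.
    """
    x, y, z = p
    ring = math.hypot(x, z) - major
    return math.hypot(ring, y) - minor


def sphere_trace(origin, direction, sdf, max_steps=128, eps=1e-4, t_max=100.0):
    """March along a ray until the SDF reports a hit.

    direction must be unit length so that distances and ray parameters agree.
    Returns the hit distance t, or None if the ray escapes or runs out of steps.
    """
    t = 0.0
    for _ in range(max_steps):
        p = tuple(o + t * d for o, d in zip(origin, direction))
        dist = sdf(p)
        if dist < eps:
            return t  # close enough to the surface to count as a hit
        t += dist     # safe step: nothing is closer than dist
        if t > t_max:
            break
    return None


def torus(p):
    return sd_torus(p, major=1.0, minor=0.25)


hit = sphere_trace((-5.0, 0.0, 0.0), (1.0, 0.0, 0.0), torus)
miss = sphere_trace((-5.0, 5.0, 0.0), (1.0, 0.0, 0.0), torus)
```

The loop only ever needs the first intersection along the ray, which is why the four possible roots of the analytic approach never have to be enumerated.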

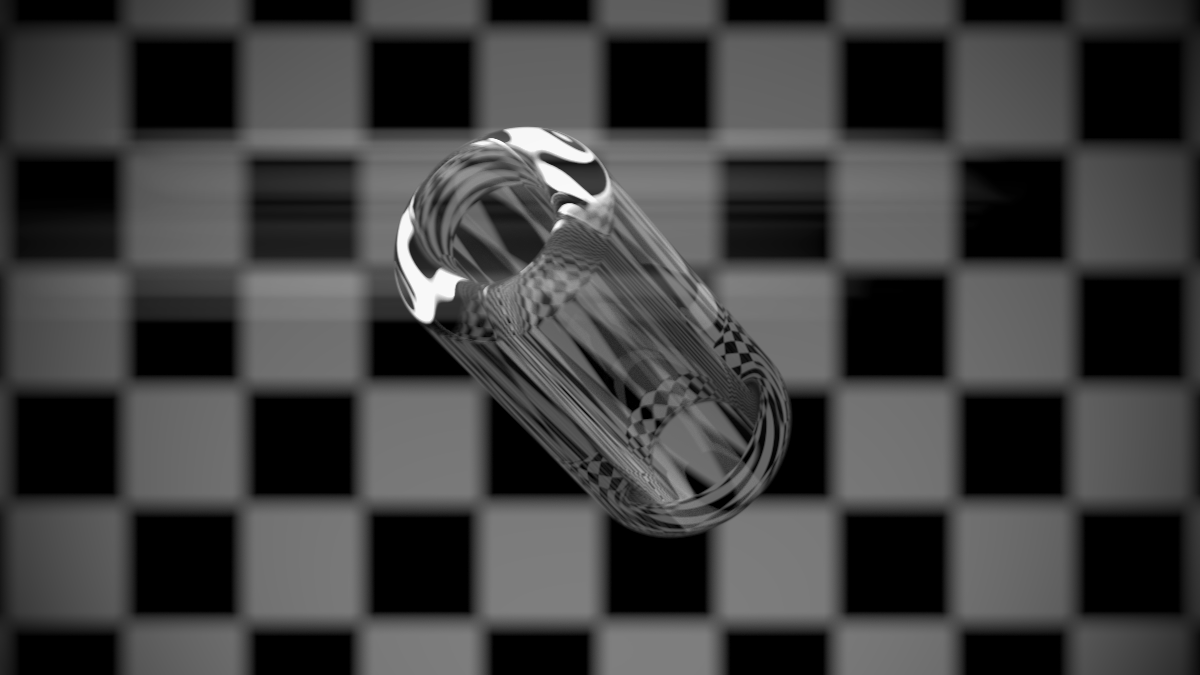

The acrylic plinth modeled as a swept signed distance function and rendered as a specular dielectric.

For the rendering itself, I implemented a progressive spectral path tracer. Rays are seeded using a thin-lens camera model before being iteratively traced throughout the scene. Unlike most renderers which permit rays to branch at surface intersections, the limitations of the GLSL compiler meant that it was necessary to probabilistically select between each component of the rendering integral one at a time. This brings compile time down at the cost of increased estimator variance and slower convergence.
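The trade-off in that last step can be sketched in a few lines. Instead of evaluating every component of a sum, one component is chosen at random and its value is divided by the probability of choosing it, which keeps the expected value unchanged. The component values and probabilities below are purely hypothetical placeholders, not quantities taken from the shader.

```python
import random


def one_sample_estimate(components, probabilities, rng):
    """Evaluate a single randomly chosen component, reweighted by 1/p.

    components is a list of zero-argument callables, and probabilities
    is a matching list of selection probabilities that sums to 1.
    """
    u = rng.random()
    cumulative = 0.0
    for evaluate, p in zip(components, probabilities):
        cumulative += p
        if u < cumulative:
            return evaluate() / p
    # Floating-point rounding can leave u just above the final cumulative sum.
    return components[-1]() / probabilities[-1]


# Two hypothetical contributions, e.g. a reflected and a transmitted term.
components = [lambda: 0.2, lambda: 0.6]
probabilities = [0.5, 0.5]

rng = random.Random(0)
samples = [one_sample_estimate(components, probabilities, rng)
           for _ in range(20000)]
mean = sum(samples) / len(samples)  # converges towards 0.2 + 0.6
```

Each individual sample is either 0.4 or 1.2 rather than the true sum, which is the added variance mentioned above. Choosing probabilities proportional to each component's expected contribution reduces that variance.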

Compositing

Given that NASA’s original gyroscope image is a photo of a photo, grading the output from the render was important to achieve the proper look. After experimenting with tables of values measured from physical film stock, I resorted instead to fitting a set of curves to colours sampled from the original photo. This yielded a compact mapping from the grey-scale photo of Maryam to the sepia-toned palette of the NASA photo.

NASA's original photo of one of the quartz gyroscopes from the Gravity Probe B project.

To further break up the optically perfect output from the path tracer, I added a number of post-processing artifacts that are commonly encountered when using analogue cameras and lenses. A small amount of Gaussian noise mimicked the grain caused by silver halide crystals in the film stock. In addition, subtle vignetting and a wide anti-aliasing kernel effectively reproduced the imperfections of an old compound lens.
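A minimal sketch of two of these effects, applied per pixel, might look like the following. The quadratic falloff, the strength and sigma values, and the function names are all assumptions chosen for illustration; the shader's actual formulas may differ.

```python
import random


def vignette(u, v, strength=0.5):
    """Darkening factor for normalised image coordinates u, v in [0, 1].

    Returns 1.0 at the image centre, falling to 1 - strength at the corners.
    """
    dx, dy = u - 0.5, v - 0.5
    r2 = dx * dx + dy * dy        # 0 at the centre, 0.5 at the corners
    return 1.0 - strength * (r2 / 0.5)


def add_grain(value, rng, sigma=0.02):
    """Add zero-mean Gaussian noise to a pixel value and clamp to [0, 1]."""
    return min(1.0, max(0.0, value + rng.gauss(0.0, sigma)))


def post_process(value, u, v, rng):
    """Apply the vignette first, then grain on top of the darkened value."""
    return add_grain(value * vignette(u, v), rng)


rng = random.Random(42)
centre = post_process(0.8, 0.5, 0.5, rng)
corner = post_process(0.8, 0.0, 0.0, rng)
```

Applying the grain after the vignette keeps the noise amplitude uniform across the frame instead of fading it out towards the corners.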

While lighting the scene, I found it continually challenging to avoid blowing out the highlights that appeared on the rim of the gyroscope. Rather than simply clamping the values down, I decided instead to make them a feature of the image by adding a small amount of artificial bloom. Though this effect isn’t present in the original photo, it adds a nice embellishment by drawing the eye towards the gyroscope as it sits upon its plinth.